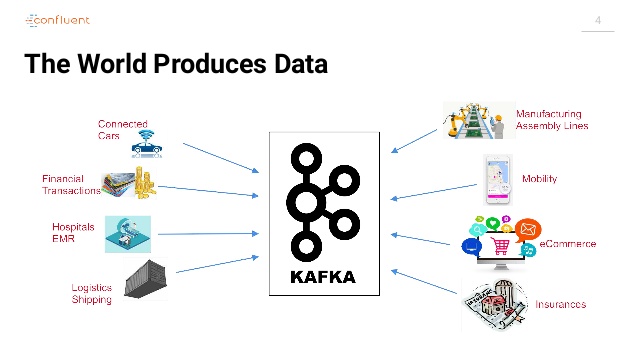

Kafka Streams is a client library used to process and analyze data available in Kafka. The processed data can be pushed to Kafka or stream it to another external system. It combines the simplicity of writing and deploying standard Java and Scala applications on the client side with the benefits of Kafka’s server-side cluster technology. Kafka Streams is built upon on robust stream processing which clearly identifies event time and processing time, scaling by partitioning the topics and wells manages the state of the application. It is a lightweight library which can be easily integrated into any streaming/publishing application. The application can be executed in a standalone or in application server or in a container.

Features:

- Elastic, highly scalable, fault-tolerant

- Deploy to containers, VMs, bare metal, cloud

- Equally viable for small, medium, & large use cases

- Fully integrated with Kafka security

- Write standard Java and Scala applications

- Exactly-once processing semantics

- No separate processing cluster required

- Develop on Mac, Linux, Windows

Kafka Streams directly addresses a lot of the difficult problems in stream processing:

- Event-at-a-time processing

- Stateful processing including distributed joins and aggregations.

- Windowing with out-of-order data using a DataFlow-like model.

- Distributed processing and fault-tolerance with fast fail over.

- Rolling deployments with no downtime.

Also Apache Spark can be used with Kafka to stream the data. In case if Apache Spark is used only for this application can turn out to be complex/costlier implementation.

The goal is to simplify stream processing enough to make it accessible as a mainstream application programming model for asynchronous services. Streams is built on the concept of KTables and KStreams, which helps them to provide event time processing.

As the processing model is fully integrated with the core abstractions Kafka provides to reduce moving pieces in the architecture.

Fully integrating the idea of tables of state with streams of events and making both of these available in a single conceptual framework. Making Kafka Streams a fully embedded library with no stream processing cluster — just Kafka and your application. It also balances the processing loads as new instances of your app are added or existing ones crash. And maintains local state for tables and helps in recovering from failure.

So, what should you use?

The low latency and an easy-to-use event time support also apply to Kafka Streams. It is a rather focused library, and it’s very well-suited for certain types of tasks. That’s also why some of its design can be so optimized for how Kafka works. You don’t need to set up any kind of special Kafka Streams cluster, and there is no cluster manager. And if you need to do a simple Kafka topic-to-topic transformation, count elements by key, enrich a stream with data from another topic, or run an aggregation or only real-time processing — Kafka Streams is for you.

If event time is not relevant and latencies in the seconds range are acceptable, Spark is the first choice. It is stable and almost any type of system can be easily integrated. In addition it comes with every Hadoop distribution. Furthermore, the code used for batch applications can also be used for the streaming applications as the API is the same.

References

Leave a comment